ITiCSE 2021 Paper: CS1 Reviewer App

ITiCSE 2021 is here! And this year, I’ve got a paper with Anshul Shah, Jonathan Liu, and Susan Rodger. Anshul and Jonathan were undergraduate students. Both have now graduated and are going on to grad school to study computer science education! Anshul is going to UCSD and Jonathan to Chicago.

[Also posted on Medium.]

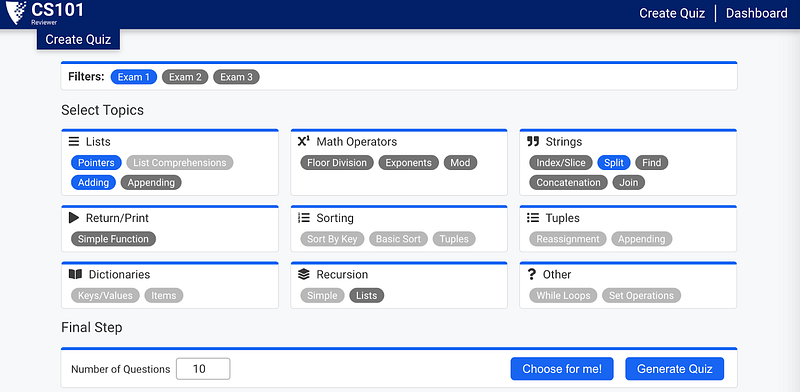

The paper is “The CS1 Reviewer App: Choose Your Own Adventure or Choose for Me!” and is a tools paper. It introduces an app that Anshul originally developed with a partner in their Fall 2019 database class. We’ve been developing the app ever since with help from other undergraduate students through Duke’s summer CS+ program. Jonathan started helping last Fall. Below is a screenshot, the abstract, and a discussion of what has changed in the six months since we submitted the paper. You can also check out the tool yourself at https://cs-reviewer.cs.duke.edu/

Abstract

We present the CS1 Reviewer App — an online tool for an introductory Python course that allows students to solve customized problem sets on many concepts in the course. Currently, the app’s questions focus on code tracing by presenting a block of Python code and asking students to predict the output of the code. The tool tracks a student’s response history to maintain a “mastery level” that represents a student’s knowledge of a concept. We also provide an option of answering auto-generated quizzes based on the student’s mastery across concepts. As a result, the tool provides students a choice between creating their own learning experience or leveraging our question selection algorithm. The app is supported on traditional webpages and mobile devices, providing a convenient way for students to study a variety of concepts. Students in the CS1 course at Duke University used this tool during the Spring and Fall 2020* semesters. In this paper, we explore trends in usage, feedback and suggestions from students, and avenues of future work based on student experiences.

* Addendum: and now Spring 2021 too.

Updates since submission

Bayesian Knowledge Tracing (BKT). The auto-generated quizzes choose questions based on a student’s mastery score for each topic: the lower the mastery, the more likely the quiz is to pick a question from that topic. The mastery score was initially a rolling average of a student’s performance for each topic on a quiz (details in the paper). We’ve now changed the mastery score calculation to use BKT, which we believe to be a more accurate and versatile measurement of a student’s topic mastery. (Catherine Yeh and Iris Howley created a visualization that helps in understanding BKT.)
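To make the idea concrete, here is a minimal sketch of the textbook BKT update paired with mastery-weighted topic selection. It is not the app's code: the parameter values, the `BKTParams`, `bkt_update`, and `pick_topic` names, and the "weight by one minus mastery" rule are all assumptions chosen for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class BKTParams:
    # Illustrative values only; these are NOT the parameters the app uses.
    p_init: float = 0.2      # prior probability the topic is already mastered
    p_transit: float = 0.15  # chance of learning the topic after one attempt
    p_slip: float = 0.1      # chance of a wrong answer despite mastery
    p_guess: float = 0.25    # chance of a right answer without mastery


def bkt_update(p_mastery, correct, params):
    """Return the updated mastery estimate after one observed answer."""
    if correct:
        num = p_mastery * (1 - params.p_slip)
        den = num + (1 - p_mastery) * params.p_guess
    else:
        num = p_mastery * params.p_slip
        den = num + (1 - p_mastery) * (1 - params.p_guess)
    posterior = num / den  # P(mastered | this answer)
    # Account for the chance the student learned the topic on this attempt.
    return posterior + (1 - posterior) * params.p_transit


def pick_topic(mastery, rng):
    """Pick a topic at random, favoring topics with lower mastery.

    Assumed rule: weight each topic by (1 - mastery). The small constant
    keeps every weight positive even at mastery 1.0.
    """
    topics = list(mastery)
    weights = [1.0 - mastery[t] + 1e-6 for t in topics]
    return rng.choices(topics, weights=weights, k=1)[0]
```

For example, starting from `BKTParams().p_init`, calling `bkt_update` once per answered question keeps a running estimate per topic, and `pick_topic({"loops": 0.9, "lists": 0.3}, random.Random())` will return `"lists"` far more often than `"loops"`.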

Wrong answers tagged with why they are incorrect. All questions are machine-generated, i.e., we wrote code to write questions (like question templates with randomized mad-libs parts). Each question currently has one correct answer and three incorrect ones. Some templates can produce more answer options than are shown, so the generator also randomly chooses which ones to use. This past semester we updated the app’s backend to associate one or more “tags” with every wrong answer option. Each tag represents a reason the option is wrong. It’s an application of my prior work from ICER 2017. The intention is to aggregate how students are doing at the tag level instead of only tracking correctness per topic.
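A question template with tagged wrong answers might look roughly like the sketch below. Everything in it is hypothetical: the `Option` class, the `list_index_question` template, and the tag names are made up for illustration and are not the app's templates or the tags from the ICER 2017 work.

```python
import random
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Option:
    text: str
    correct: bool = False
    tags: list = field(default_factory=list)  # reasons a wrong option is wrong


def list_index_question(rng):
    """Hypothetical code-tracing template with randomized "mad-libs" values."""
    # Distinct values from 2..9, so none collides with the index 1 used below.
    a, b, c = rng.sample(range(2, 10), 3)
    code = f"x = [{a}, {b}, {c}]\nprint(x[1])"
    correct = Option(str(b), correct=True)
    # The pool holds more wrong answers than will be shown.
    pool = [
        Option(str(a), tags=["one-based-indexing", "off-by-one"]),
        Option(str(c), tags=["off-by-one"]),
        Option(f"[{a}, {b}, {c}]", tags=["prints-whole-list"]),
        Option("1", tags=["prints-index-not-value"]),
        Option("IndexError", tags=["expects-error"]),
    ]
    options = [correct] + rng.sample(pool, 3)  # one correct, three incorrect
    rng.shuffle(options)
    return code, options


def tag_counts(chosen_options):
    """Aggregate a student's chosen options at the tag level."""
    counts = Counter()
    for option in chosen_options:
        counts.update(option.tags)
    return dict(counts)
```

Calling `list_index_question(random.Random())` returns the code to display plus four shuffled options. Feeding the options a student actually picked into `tag_counts` shows which kinds of mistakes recur, independent of the topic each question belongs to.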

More questions. We are also constantly writing more question templates and using them to add more questions to the app. We added recursion with lists for Spring 2021 (see screenshot below). This summer we are writing templates for booleans, if…elif…else, and for loops. One idea is to open-source the code for the question templates. We designed them to export easily into whatever format you need. My original plan included using the app’s code to generate questions for my learning management system, so I could create quizzes for my students. If you are interested in the project, let me know in a comment or email me!
